MCP March Madness

Announcing the first MCP March Madness Tournament!

This month, we're hosting a face-off like you've never seen before. If "AI Athletes" wasn't on your 2025 bingo card, don't worry... it's not quite like that.

We're putting the best of today's API-driven companies head-to-head in a matchup to see how their MCP servers perform when tool-equipped Large Language Models (LLMs) are tasked with a series of challenges.

How It Works

Each week in March, we will showcase two competing companies in a test to see how well AI can use their product through API interactions.

This will involve a Task that describes specific work requiring the use of the product via its API.

For example:

"Create a document titled 'World Populations' and add a table containing the world's largest countries including their name, populations, and ranked by population size. Provide the URL to this new document in your response."

In this example matchup, we'd run this exact same Task twice - first using Notion tools, and in another run, Google Docs. We'll compare the outputs, side-effects, and the time spent executing. Using models from Anthropic and OpenAI, we'll record each run so you can verify our findings!

We're keeping these tasks fairly simple to test the model's accuracy when calling a small set of tools. But don't worry - things will get spicier in the Grand Finale!

Additionally, we're releasing our first evaluation framework for MCP-based tool calling, which we'll use to run these tests. We really want to exercise the tools and prompts as fairly as possible, and we'll publish all evaluation data for complete transparency.

Selecting the MCPs

As a prerequisite, we're using MCPs available on our public registry at www.mcp.run. This means they may not have been created directly by the API providers themselves. Anyone is able to publish MCP servlets (WebAssembly-based, secure & portable MCP Servers) to the registry. However, to ensure these matchups are as fair as possible, we're using servlets that have been generated from the official OpenAPI specifications provided by each company.

MCP Servers [...] generated from the official OpenAPI specification provided by each company.

Wait, did I read that right?

Yes, you read that right! We'll be making this generator available later this month... so sign up and follow along for that announcement.

So, this isn't just a test of how well AI can use an API - it's also a test of how comprehensive and well-designed a platform's API specification is!
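To make the idea concrete, here is a minimal Python sketch of how OpenAPI operations could be mapped to MCP-style tool descriptors. It is not the mcp.run generator (which had not been released at the time of writing); the function name `openapi_to_tools` and the tiny `example_spec` are hypothetical, and the sketch only handles plain `parameters`, ignoring request bodies, `$ref`s, and authentication.

```python
from typing import Any

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def openapi_to_tools(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn each OpenAPI operation into a tool descriptor (name, description, inputSchema)."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            properties: dict[str, Any] = {}
            required: list[str] = []
            for param in op.get("parameters", []):
                # The parameter description is carried into the tool's input schema,
                # which is what the model eventually sees in its context.
                properties[param["name"]] = {
                    **param.get("schema", {}),
                    "description": param.get("description", ""),
                }
                if param.get("required"):
                    required.append(param["name"])
            tools.append({
                "name": op.get("operationId", f"{method}_{path}"),
                "description": op.get("description") or op.get("summary", ""),
                "inputSchema": {
                    "type": "object",
                    "properties": properties,
                    "required": required,
                },
            })
    return tools


# Hypothetical one-operation spec, for illustration only.
example_spec = {
    "paths": {
        "/audiences": {
            "post": {
                "operationId": "create_audience",
                "summary": "Create a new audience",
                "parameters": [
                    {
                        "name": "name",
                        "in": "query",
                        "required": True,
                        "schema": {"type": "string"},
                        "description": "Display name of the audience",
                    }
                ],
            }
        }
    }
}

for tool in openapi_to_tools(example_spec):
    print(tool["name"], "-", tool["description"])
```

Note how an operation with no `summary` or `description` would produce a tool with an empty description, leaving the model to guess from the name alone. That is why the quality of the spec matters so much in these matchups.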

NEW: Our Tool Use Eval Framework

As mentioned above, we're excited to share our new evaluation framework for LLM tool calling!

mcpx-eval is a framework for evaluating LLM tool calling using mcp.run tools.

The primary focus is to compare the results of open-ended prompts, such as mcp.run Tasks. We're thrilled to provide this resource to help users make better-informed decisions when selecting LLMs to pair with mcp.run tools.

If you're interested in this kind of technology, check out the repository on GitHub, and read our full announcement for an in-depth look at the framework.

The Grand Finale

After 3 rounds of 1-vs-1 MCP face-offs, we're taking things up a notch. We'll put the winning MCP Servers on one team and the challengers on another. We'll create a new Task with more sophisticated work that requires using all 3 platforms' APIs to complete a complex challenge.

May the best MCP win!

2025 Schedule

Round 1: Supabase vs. Neon

📅 March 5, 2025

We put two of the best Postgres platforms head-to-head to kick off MCP March Madness! Watch the matchup in real time, executing a Task to ensure a project is ready to use and to generate and execute the SQL needed to set up a database to manage our NewsletterOS application.

Here's the prompt:

Pre-requisite:

- a database and or project to use inside my account

- name: newsletterOS

Create the tables necessary to act as the primary transactional database for a Newsletter Management System, where its many publishers manage the creation of newsletters and the subscribers to each newsletter.

I expect to be able to work with tables of information including data on:

- publisher

- subscribers

- newsletters

- subscriptions (mapping subscribers to newsletters)

- newsletter_release (contents of newsletter, etc)

- activity (maps publisher to a enum of activity types & JSON)

Execute the necessary queries to set my database up with this schema.

In each Task, we attach the Supabase and Neon mcp.run servlets to the prompt, giving our Task access to manage those respective accounts on our behalf via their APIs.

See how Supabase handles our Task as Claude Sonnet 3.5 uses MCP server tools:

Next, see how Neon handles the same Task, leveraging Claude Sonnet 3.5 and the Neon MCP server tools we generated from their OpenAPI spec.

The results are in... and the winner is...

🏆 Supabase 🏆

Summary

Unfortunately, Neon was unable to complete the Task as-is using only the functionality exposed via its official OpenAPI spec. But they can (and hopefully will!) make it possible for an OpenAPI consumer to run SQL this way. As noted in the video, their hand-written MCP Server does support this. We'd love to make this as feature-rich on mcp.run so any Agent or AI App in any language or framework (even running on mobile devices!) can work as seamlessly.

Eval Results

In addition to the Tasks we ran, we also executed this prompt with our own eval framework mcpx-eval. We configure this eval using the following, and when it runs we provide the profile where the framework can load and call the right tools:

name = "neon-vs-supabase"

max-tool-calls = 100

prompt = """

Pre-requisite:

- a database and or project to use inside my account

- name: newsletterOS

Create the tables and any seed data necessary to act as the primary transactional database for a Newsletter Management System, where its many publishers manage the creation of newsletters and the subscribers to each newsletter.

Using tools, please create tables for:

- publisher

- subscribers

- newsletters

- subscriptions (mapping subscribers to newsletters)

- newsletter_release (contents of newsletter, etc)

- activity (maps publisher to a enum of activity types & JSON)

Execute the necessary commands to set my database up for this.

When all the tables are created, output the queries to describe the database.

"""

check="""

Use tools and the output of the LLM to check that the tables described in the <prompt> have been created.

When selecting tools you should never in any case use the search tool.

"""

ignore-tools = [

"v1_create_a_sso_provider",

"v1_update_a_sso_provider",

]

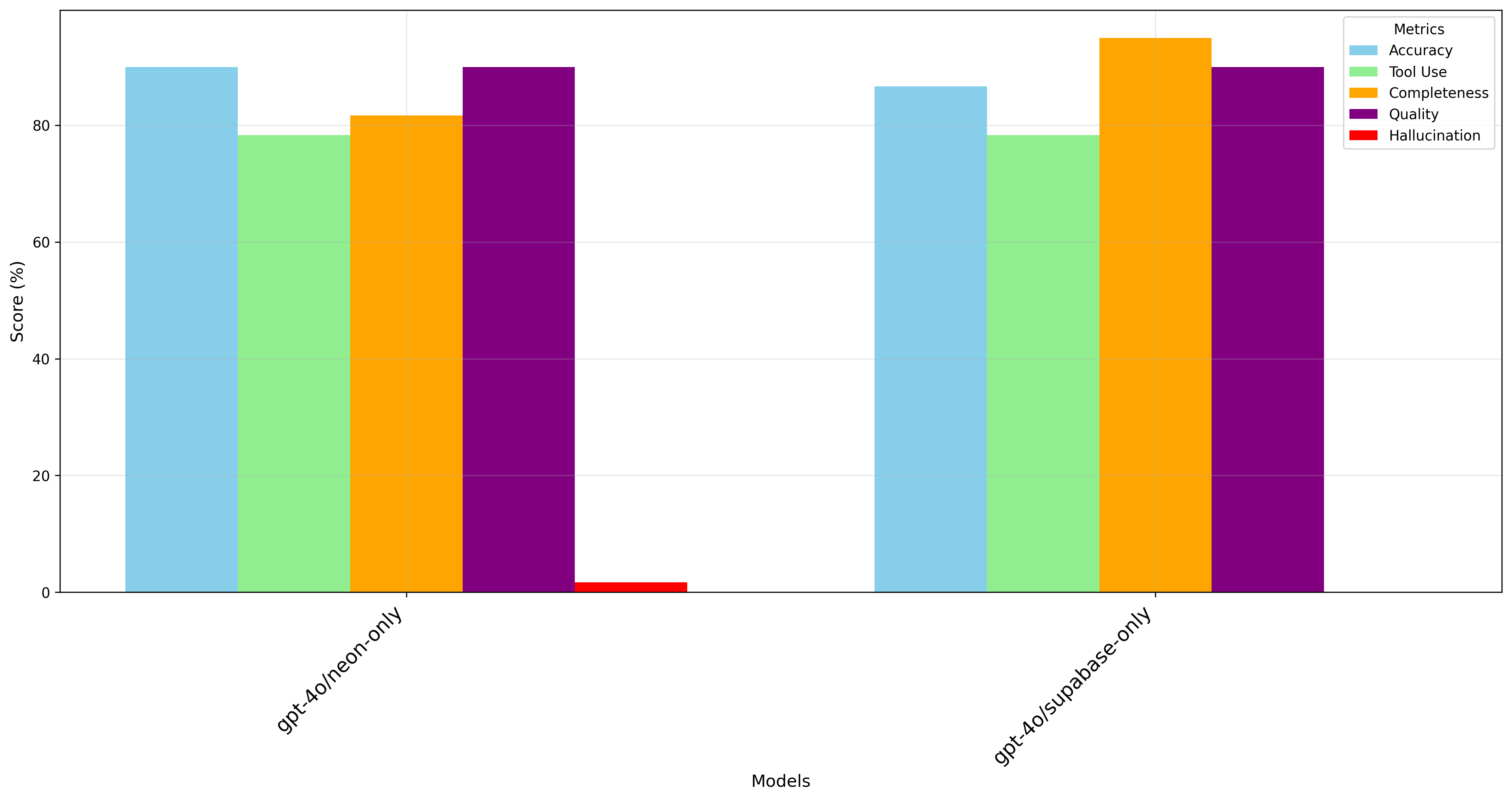

Supabase vs. Neon (with OpenAI GPT-4o)

Neon (left) outperforms Supabase (right) on the accuracy dimension by a few points - likely due to better OpenAPI spec descriptions, and potentially more specific endpoints. These materialize as tool calls and tool descriptions, which are provided as context to the inference run, and make a big difference.

In all, both platforms did great, and if Neon adds query execution support via OpenAPI, we'd be very excited to put it to use.

Next up!

Stick around for another match-up next week... details below ⬇️

Round 2: Resend vs. Loops

📅 March 12, 2025

Everybody loves email, right? Today we're comparing some popular email platforms to see how well their APIs are designed for AI usage. Can an Agent or AI app successfully carry out our task? Let's see!

Here's the prompt:

Unless it already exists, create a new audience for my new newsletter called: "{{ audience }}"

Once it is created, add a test contact: "{{ name }} {{ email }}".

Then, send that contact a test email with some well-designed email-optimized HTML that you generate. Make the content and the design/theme relevant based on the name of the newsletter for which you created the audience.

Notice how we have parameterized this prompt with replacement parameters! This allows mcp.run Tasks to be dynamically updated with values - especially helpful when triggering them from an HTTP call or Webhook.
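As a rough illustration of what this kind of parameterization involves, here is a minimal Python sketch that fills `{{ name }}`-style placeholders from a dictionary. It is not how mcp.run renders Tasks internally; `render_prompt` is a hypothetical helper, and the choice to fail loudly on a missing value is an assumption.

```python
import re

# Matches {{ key }} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, values: dict[str, str]) -> str:
    """Replace every {{ key }} in the template with values[key]."""

    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            # Fail loudly rather than send a half-filled prompt to the model.
            raise KeyError(f"missing value for placeholder: {key}")
        return values[key]

    return PLACEHOLDER.sub(substitute, template)


template = (
    "Unless it already exists, create a new audience "
    'for my new newsletter called: "{{ audience }}"'
)
print(render_prompt(template, {"audience": "cat-facts"}))
```

In a webhook-triggered setup, the `values` dictionary would typically come from the incoming request payload.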

In each Task, we attach the Resend and Loops mcp.run servlets to the prompt, giving our Task access to manage those respective accounts on our behalf via their APIs.

See how Resend handles our Task using its MCP server tools:

Next, see how Loops handles the same Task, leveraging the Loops MCP server tools we generated from their OpenAPI spec.

The results are in... and the winner is...

🏆 Resend 🏆

Summary

Similar to Neon in Round 1, Loops was unable to complete the Task as-is using only the functionality exposed via its official OpenAPI spec. Hopefully they add the missing API surface area to enable an AI application or Agent to send transactional email along with a new template on the fly.

Resend was clearly designed to be extremely flexible, and the model was able to figure out exactly what it needed to do in order to perfectly complete our Task.

Eval Results

In addition to the Tasks we ran, we also executed this prompt with our own eval framework mcpx-eval. We configure this eval using the following, and when it runs we provide the profile where the framework can load and call the right tools:

name = "loops-vs-resend"

prompt = """

Unless it already exists, create a new audience for my new newsletter called: "cat-facts"

Once it is created, add a test contact: "Zach [email protected]"

Then, send that contact a test email with some well-designed email-optimized HTML that you generate. Make the content and the design/theme relevant based on the name of the newsletter for which you created the audience.

"""

check="""

Use tools to check that the audience exists and that the email was sent correctly

"""

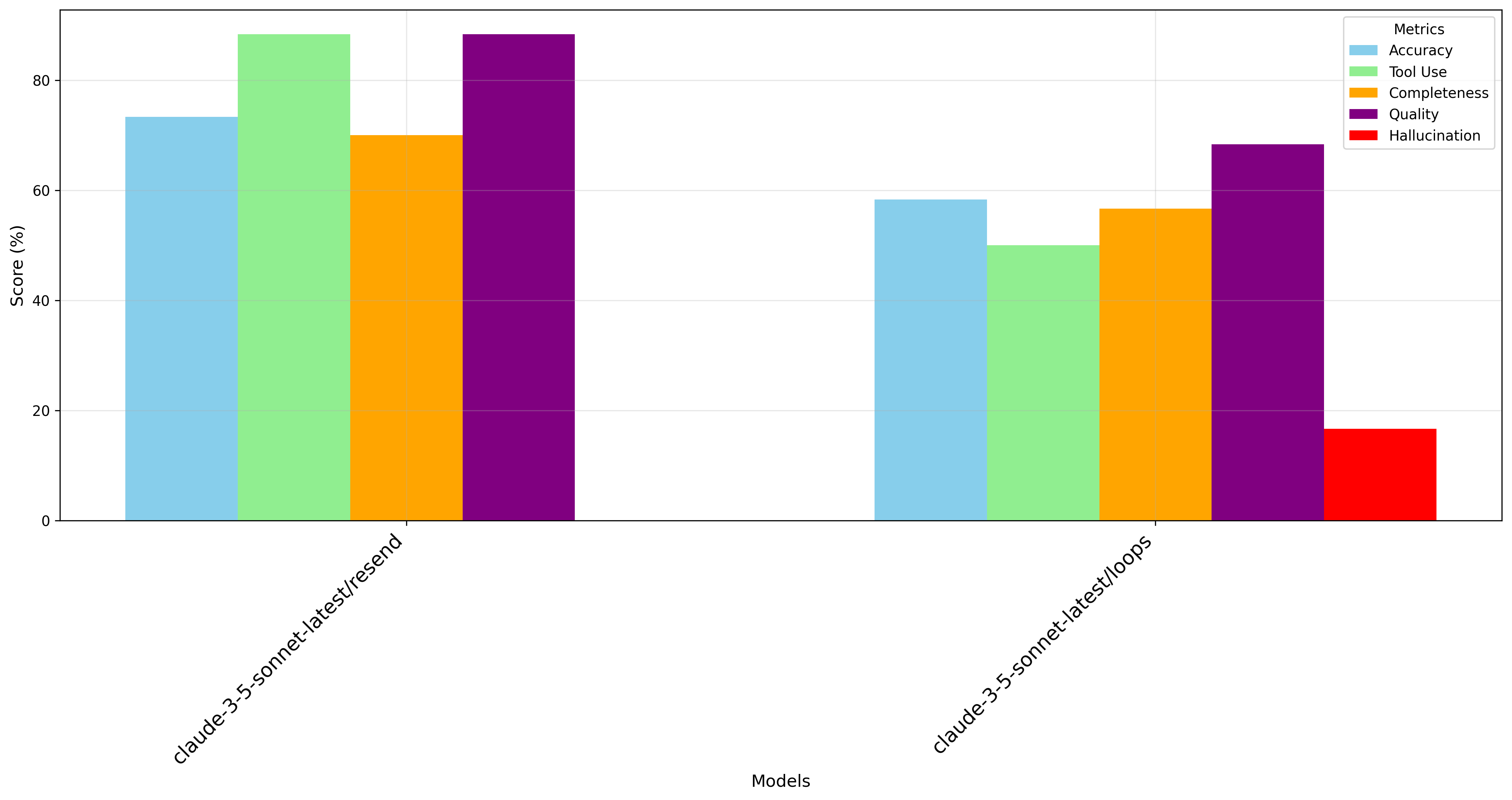

Resend vs. Loops (with OpenAI GPT-4o)

Resend (left) outperforms Loops (right) across the board. This is partly because Loops is missing the functionality needed to complete the task, but it is also likely because Resend's OpenAPI spec is extremely comprehensive and includes very rich descriptions and detail.

Remember, all of this makes its way into the context of the inference request, and influences how the model decides to respond with a tool request. The better your descriptions, the more accurately the model will use your tool!

Next up!

Stick around for another match-up next week... details below ⬇️

Round 3: Perplexity vs. Brave Search

📅 March 19, 2025

If you're looking for something like DeepResearch, without the "PhD-level reasoning" or the price tag that goes along with it, then this is the round for you!

Perplexity is a household name, and packs a punch for sourcing relevant and recent information on any subject. Through its Sonar API, we can programmatically make our way through the web. Brave exposes its powerful, more traditional search engine via API. Which one can deliver the best results for us when asked to find recent news and information about a given topic?

Here's the prompt:

We need to find the latest, most interesting and important news for people who have subscribed to our "{{ topic }}" newsletter.

To do this, search the web for news and information about {{ topic }}, do many searches for newly encountered & highly related terms, associated people, and other related insights that would be interesting to our subscribers.

These subscribers are very aware of {{ topic }} space and what is happening, so when we find a good source on the web, also add some intellegent & researched prose around the article or content. Limit this to just a sentence or two, and include it in the output you provide.

Output all of the links you find on the web and your expert additional prose in a Markdown format so it can be read and approved by a 3rd party.

Notice how we have parameterized this prompt with replacement parameters! This allows mcp.run Tasks to be dynamically updated with values - especially helpful when triggering them from an HTTP call or Webhook.

In each Task, we attach the Perplexity and Brave Search mcp.run servlets to the prompt, giving our Task access to manage those respective accounts on our behalf via their APIs.

This round, we've combined the Task runs into a single video. Check them out:

Content Output

Here's the full, rendered output from each of the Tasks run in the video. What do you think: which did a better job finding us results for "AI Agent" news to include in a newsletter?

- Perplexity

- Brave Search

AI Agents Newsletter: Latest Developments - March 2025

NVIDIA's Game-Changing AI Agent Infrastructure

NVIDIA AI-Q Blueprint and AgentIQ Toolkit

NVIDIA unveiled AI-Q, a comprehensive Blueprint for developing agentic systems that's reshaping how enterprises build AI agents. The framework integrates NVIDIA's accelerated computing with partner storage platforms and software tools.

The AI-Q Blueprint represents NVIDIA's strategic move to dominate the enterprise AI agent infrastructure market, positioning them as the essential foundation for companies building sophisticated agent systems.

Llama Nemotron Model Family

NVIDIA launched the Llama Nemotron family of open reasoning AI models designed specifically for agent development. Available in three sizes (Nano: 8B, Super: 49B, and Ultra: 253B parameters), these models offer advanced reasoning capabilities with up to 20% improved accuracy over base Llama models.

These models are particularly significant as they offer hybrid reasoning capabilities that let developers toggle reasoning on/off to optimize token usage and costs—a critical feature for enterprise deployment that could accelerate adoption.

Enterprise AI Agent Adoption

Industry Implementation Examples

- Yum Brands is deploying voice ordering AI agents in restaurants, with plans to roll out to 500 locations this year.

- Visa is using AI agents to streamline cybersecurity operations and automate phishing email analysis.

- Rolls-Royce has implemented AI agents to assist service desk workers and streamline operations.

While these implementations show promising use cases, the ROI metrics remain mixed—only about a third of C-suite leaders report substantial ROI in areas like employee productivity (36%) and cost reduction, suggesting we're still in early stages of effective deployment.

Zoom's AI Companion Enhancements

Zoom introduced new agentic AI capabilities for its AI Companion, including calendar management, clip generation, and advanced document creation. A custom AI Companion add-on is launching in April at $12/user/month.

Zoom's approach of integrating AI agents directly into existing workflows rather than as standalone tools could be the key to avoiding the "productivity leak" problem, where 72% of time saved by AI doesn't convert to additional throughput.

Developer Tools and Frameworks

OpenAI Agents SDK

OpenAI released a new set of tools specifically designed for building AI agents, including a new Responses API that combines chat capabilities with tool use, built-in tools for web search, file search, and computer use, and an open-source Agents SDK for orchestrating single-agent and multi-agent workflows.

This release significantly lowers the barrier to entry for developers building sophisticated agent systems and could accelerate the proliferation of specialized AI agents across industries.

Eclipse Foundation Theia AI

The Eclipse Foundation announced two new open-source AI development tools: Theia AI (an open framework for integrating LLMs into custom tools and IDEs) and an AI-powered Theia IDE built on Theia AI.

As an open-source alternative to proprietary development environments, Theia AI could become the foundation for a new generation of community-driven AI agent development tools.

Research Breakthroughs

Multi-Agent Systems

Recent research has focused on improving inter-agent communication and cooperation, particularly in autonomous driving systems using LLMs. The development of scalable multi-agent frameworks like Nexus aims to make MAS development more accessible and efficient.

The shift toward multi-agent systems represents a fundamental evolution in AI agent architecture, moving from single-purpose tools to collaborative systems that can tackle complex, multi-step problems.

SYMBIOSIS Framework

Cabrera et al. (2025) introduced the SYMBIOSIS framework, which combines systems thinking with AI to bridge epistemic gaps and enable AI systems to reason about complex adaptive systems in socio-technical contexts.

This framework addresses one of the most significant limitations of current AI agents—their inability to understand and navigate complex social systems—and could lead to more contextually aware and socially intelligent agents.

Ethical and Regulatory Developments

EU AI Act Implementation

The EU AI Act, expected to be fully implemented by 2025, introduces a risk-based approach to regulating AI with stricter requirements for high-risk applications, including mandatory risk assessments, human oversight mechanisms, and transparency requirements.

As the first comprehensive AI regulation globally, the EU AI Act will likely set the standard for AI agent governance worldwide, potentially creating compliance challenges for companies operating across borders.

Industry Standards Emerging

Organizations like ISO/IEC JTC 1/SC 42 and the NIST AI Risk Management Framework are developing guidelines for AI governance, including specific considerations for autonomous agents.

These standards will be crucial for establishing common practices around AI agent development and deployment, potentially reducing fragmentation in approaches to AI safety and ethics.

This newsletter provides a snapshot of the rapidly evolving AI Agent landscape. As always, we welcome your feedback and suggestions for future topics.

AI Agents Newsletter: Latest Developments and Insights

The Rise of Autonomous AI Agents in 2025

IBM Predicts the Year of the Agent

According to IBM's recent analysis, 2025 is shaping up to be "the year of the AI agent." A survey conducted with Morning Consult revealed that 99% of developers building AI applications for enterprise are exploring or developing AI agents. This shift from passive AI assistants to autonomous agents represents a fundamental evolution in how AI systems operate and interact with the world.

IBM's AI Agents 2025: Expectations vs. Reality

IBM's research highlights a crucial distinction between today's function-calling models and truly autonomous agents. While many companies are rushing to adopt agent technology, IBM cautions that most organizations aren't yet "agent-ready" - the real challenge lies in exposing enterprise APIs for agent integration.

China's Manus: A Revolutionary Autonomous Agent

In a significant development, Chinese researchers launched Manus, described as "the world's first fully autonomous AI agent." Manus uses a multi-agent architecture where a central "executor" agent coordinates with specialized sub-agents to break down and complete complex tasks without human intervention.

Forbes: China's Autonomous Agent, Manus, Changes Everything

Manus represents a paradigm shift in AI development - not just another model but a truly autonomous system capable of independent thought and action. Its ability to navigate the real world "as seamlessly as a human intern with an unlimited attention span" signals a new era where AI systems don't just assist humans but can potentially replace them in certain roles.

MIT Technology Review's Hands-On Test of Manus

MIT Technology Review recently tested Manus on several real-world tasks and found it capable of breaking tasks down into steps and autonomously navigating the web to gather information and complete assignments.

MIT Technology Review: Everyone in AI is talking about Manus. We put it to the test.

While experiencing some system crashes and server overload, MIT Technology Review found Manus to be highly intuitive with real promise. What sets it apart is the "Manus's Computer" window, allowing users to observe what the agent is doing and intervene when needed - a crucial feature for maintaining appropriate human oversight.

Multi-Agent Systems: The Power of Collaboration

The Evolution of Multi-Agent Architectures

Research into multi-agent systems (MAS) is accelerating, with multiple AI agents collaborating to achieve common goals. According to a comprehensive analysis by data scientist Sahin Ahmed, these systems are becoming increasingly sophisticated in their ability to coordinate and solve complex problems.

Medium: AI Agents in 2025: A Comprehensive Review

The multi-agent approach is proving particularly effective in scientific research, where specialized agents handle different aspects of the research lifecycle - from literature analysis and hypothesis generation to experimental design and results interpretation. This collaborative model mirrors effective human teams and is showing promising results in fields like chemistry.

The Oscillation Between Single and Multi-Agent Systems

IBM researchers predict an interesting oscillation in agent architecture development. As individual agents become more capable, there may be a shift from orchestrated workflows to single-agent systems, followed by a return to multi-agent collaboration as tasks grow more complex.

IBM's Analysis of Agent Architecture Evolution

This back-and-forth evolution reflects the natural tension between simplicity and specialization. While a single powerful agent might handle many tasks, complex problems often benefit from multiple specialized agents working in concert - mirroring how human organizations structure themselves.

Development Platforms and Tools for AI Agents

OpenAI's New Agent Development Tools

OpenAI recently released new tools designed to help developers and enterprises build AI agents using the company's models and frameworks. The Responses API enables businesses to develop custom AI agents that can perform web searches, scan company files, and navigate websites.

TechCrunch: OpenAI launches new tools to help businesses build AI agents

OpenAI's shift from flashy agent demos to practical development tools signals their commitment to making 2025 the year AI agents enter the workforce, as proclaimed by CEO Sam Altman. These tools aim to bridge the gap between impressive demonstrations and real-world applications.

Top Frameworks for Building AI Agents

Analytics Vidhya has identified seven leading frameworks for building AI agents in 2025, highlighting their key components: agent architecture, environment interfaces, integration tools, and monitoring capabilities.

Analytics Vidhya: Top 7 Frameworks for Building AI Agents in 2025

These frameworks provide standardized approaches to common challenges in AI agent development, allowing developers to focus on the unique aspects of their applications rather than reinventing fundamental components. The ability to create "crews" of AI agents with specific roles is particularly valuable for tackling multifaceted problems.

Agentforce 2.0: Enterprise-Ready Agent Platform

Agentforce 2.0, scheduled for full release in February 2025, offers businesses customizable agent templates for roles like Service Agent, Sales Rep, and Personal Shopper. The platform's advanced reasoning engine enhances agents' problem-solving capabilities.

Medium: A List of AI Agents Set to Dominate in 2025

Agentforce's approach of providing ready-made templates while allowing extensive customization strikes a balance between accessibility and flexibility. This platform exemplifies how agent technology is being packaged for enterprise adoption with minimal technical barriers.

Ethical Considerations and Governance

The Ethics Debate Sparked by Manus

The launch of Manus has intensified debates about AI ethics, security, and oversight. Margaret Mitchell, Hugging Face Chief Ethics Scientist, has called for stronger regulatory action and "sandboxed" environments to ensure agent systems remain secure.

Forbes: AI Agent Manus Sparks Debate On Ethics, Security And Oversight

Mitchell's research asserts that completely autonomous AI agents should be approached with caution due to potential security vulnerabilities, diminished human oversight, and susceptibility to manipulation. As AI's capabilities grow, so does the necessity of aligning it with human ethics and establishing appropriate governance frameworks.

AI Governance Trends for 2025

Following the Paris AI Action Summit, several key governance trends have emerged for 2025, including stricter AI regulations, enhanced transparency requirements, and more robust risk management frameworks.

GDPR Local: Top 5 AI Governance Trends for 2025

The summit emphasized "Trust as a Cornerstone" for sustainable AI development. Interestingly, AI is increasingly being used to govern itself, with automated compliance tools monitoring AI models, verifying regulatory alignment, and detecting risks in real-time becoming standard practice.

Market Projections and Industry Impact

The Economic Impact of AI Agents

Deloitte predicts that 25% of enterprises using Generative AI will deploy AI agents by 2025, doubling to 50% by 2027. This rapid adoption is expected to create significant economic value across multiple sectors.

WriteSonic: Top AI Agent Trends for 2025

By 2025, AI systems are expected to evolve into collaborative networks that mirror effective human teams. In business contexts, specialized AI agents will work in coordination - analyzing market trends, optimizing product development, and managing customer relationships simultaneously.

Forbes Identifies Key AI Trends for 2025

Forbes has identified five major AI trends for 2025, with autonomous AI agents featuring prominently alongside open-source models, multi-modal capabilities, and cost-efficient automation.

Forbes: The 5 AI Trends In 2025: Agents, Open-Source, And Multi-Model

The AI landscape in 2025 is evolving beyond large language models to encompass smarter, cheaper, and more specialized solutions that can process multiple data types and act autonomously. This shift represents a maturation of the AI industry toward more practical and integrated applications.

Conclusion: The Path Forward

As we navigate the rapidly evolving landscape of AI agents in 2025, the balance between innovation and responsibility remains crucial. While autonomous agents offer unprecedented capabilities for automation and problem-solving, they also raise important questions about oversight, ethics, and human-AI collaboration.

The most successful implementations will likely be those that thoughtfully integrate agent technology into existing workflows, maintain appropriate human supervision, and adhere to robust governance frameworks. As IBM's researchers noted, the challenge isn't just developing more capable agents but making organizations "agent-ready" through appropriate API exposure and governance structures.

For businesses and developers in this space, staying informed about both technological advancements and evolving regulatory frameworks will be essential for responsible innovation. The year 2025 may indeed be "the year of the agent," but how we collectively shape this technology will determine its lasting impact.

This is a close one...

As noted in the recording, both Perplexity and Brave Search servlets did a great job. It's difficult to say who wins off vibes alone... so let's use some 🧪 science! Leveraging mcpx-eval to help us decide removes the subjective component of declaring a winner.

Eval Results

Here's the configuration for the eval we ran:

name = "perplexity-vs-brave"

prompt = """

We need to find the latest, most interesting and important news for people who have subscribed to our AI newsletter.

To do this, search the web for news and information about AI, do many searches for newly encountered & highly related terms, associated people, and other related insights that would be interesting to our subscribers.

These subscribers are very aware of AI space and what is happening, so when we find a good source on the web, also add some intellegent & researched prose around the article or content. Limit this to just a sentence or two, and include it in the output you provide.

Output all of the links you find on the web and your expert additional prose in a Markdown format so it can be read and approved by a 3rd party.

Only use tools available to you, do not use the mcp.run search tool

"""

check="""

Searches should be performed to collect information about AI, the result should be a well formatted and easily understood markdown document

"""

expected-tools = [

"brave-web-search",

"brave-image-search",

"perplexity-chat"

]
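The post does not spell out how mcpx-eval scores `expected-tools`, so the following Python sketch is only one plausible interpretation: compare the tools a run actually called against the expected list. The function `tool_coverage`, the call log, and the tool name `fetch` in it are hypothetical.

```python
def tool_coverage(expected: list[str], called: list[str]) -> dict[str, list[str]]:
    """Summarize which expected tools were used, unused, or called unexpectedly."""
    called_set = set(called)
    expected_set = set(expected)
    return {
        "used": [tool for tool in expected if tool in called_set],
        "unused": [tool for tool in expected if tool not in called_set],
        "unexpected": sorted(called_set - expected_set),
    }


expected_tools = ["brave-web-search", "brave-image-search", "perplexity-chat"]

# Hypothetical log of tool calls from a single run.
call_log = ["brave-web-search", "brave-web-search", "fetch"]

report = tool_coverage(expected_tools, call_log)
for category, tools in report.items():
    print(category, tools)
```

A report like this only shows which tools were touched; it says nothing about whether the calls were well-formed or the final output was any good, which is what the `check` prompt is for.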

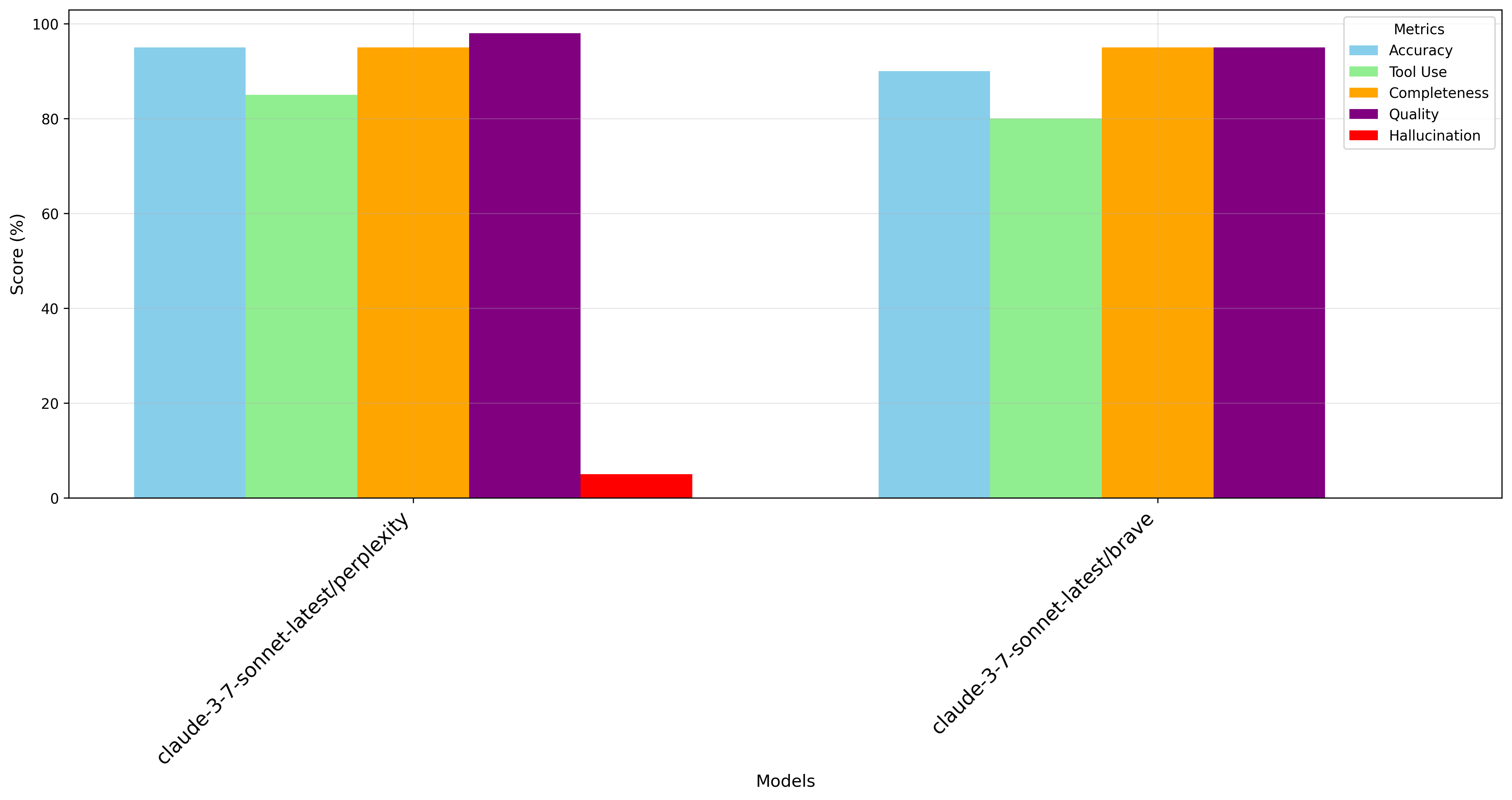

Perplexity vs. Brave (with Claude Sonnet 3.7)

Perplexity (left) outperforms Brave (right) on practically all dimensions, except that it does end up hallucinating every once in a while. This is a hard one to judge, but if we only look at the data, the results are in Perplexity's favor. We want to highlight that Brave did an incredible job here though, and for most search tasks, we would highly recommend it.

The results are in... and the winner is...

🏆 Perplexity 🏆

Next up!

We're on the road to the Grand Finale...

Originally, the Grand Finale was going to combine the top 3 MCP servlets and put them against the bottom 3. However, since Neon and Loops were unable to complete their tasks, we figured we'd do something a little bit more interesting.

Tune in next week to see a "headless application" at work, combining the Supabase, Resend, and Perplexity MCPs to collectively carry out a sophisticated task.

Can we really put AI to work?

We'll find out next week!

...details below ⬇️

Grand Finale: 3 vs. 3 Showdown

📅 March 26, 2025

Without further ado, let's get right into it! The grand finale combines Supabase, Resend, and Perplexity into a mega MCP Task that effectively produces an entire application that runs a newsletter management system, "newsletterOS".

See what we're able to accomplish with just a single prompt, using our powerful automation platform and the epic MCP tools attached:

Here's the prompt we used to run this Task:

Prerequisites:

- "newsletterOS" project and database

Create a new newsletter called {{ topic }} in my database. Also create an audience in Resend for {{ topic }}, and add a test subscriber contact to this audience:

name: Joe Smith

email: [email protected]

In the database, add this same contact as a subscriber to the {{ topic }} newsletter.

Now, find the latest, most interesting and important news for people who have subscribed to our {{ topic }} newsletter.

To do this, search the web for news and information about {{ topic }}, do many searches for newly encountered & highly related terms, associated people, and other related insights that would be interesting to our subscribers. Use 3 - 5 news items to include in the newsletter.

These subscribers are very aware of {{ topic }} space and what is happening, so when we find a good source on the web, also add some intellegent & researched prose around the article or content. Limit this to just a sentence or two, and include it in the output you provide.

Convert your output to email-optimized HTML so it can be rendered in common email clients. Then store this HTML into the newsletter release in the database. Send a test email from "[email protected]" using the same contents to our test subscriber contact for verification.

Keep in touch

We'll be putting out more content and demos, so please follow along for more announcements:

Follow @dylibso on X

Subscribe to @dylibso on YouTube

Thanks!

How to Follow Along

We'll publish a new post on this blog for each round and update this page with the results. To stay updated on all announcements, follow @dylibso on X.

We'll record and upload all matchups to our YouTube channel, so subscribe to watch the competitions as they go live.

Want to Participate?

Interested in how your own API might perform? Or curious about running similar evaluations on your own tools? Contact us to learn more about our evaluation framework and how you can get involved!

It should be painfully obvious, but we are in no way affiliated with the NCAA. Good luck to the college athletes in their completely separate, unrelated tournament this month!